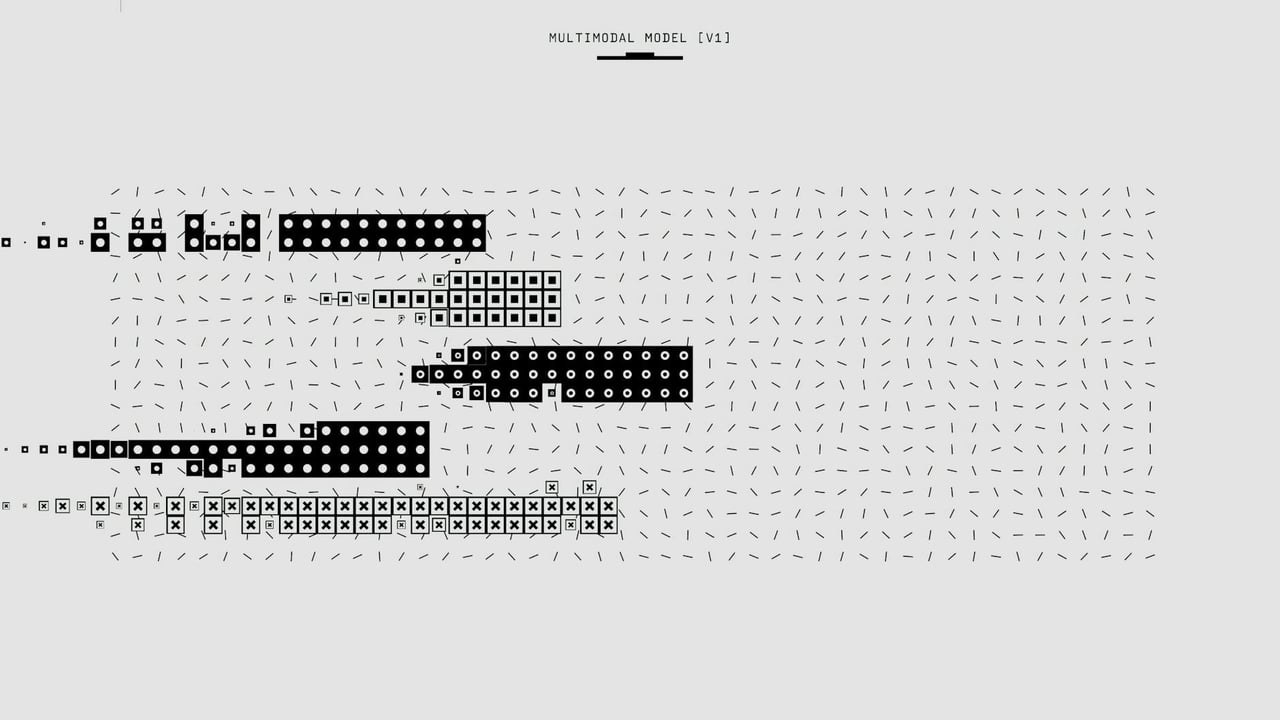

On January 20, Chinese start-up Jieyue Xingchen (阶跃星辰, known in English as StepFun) open-sourced a multimodal model named Step3-VL-10B. The company said the 10-billion-parameter system achieves state-of-the-art performance on a suite of tests covering visual perception, logical reasoning, competition mathematics and general dialogue. The announcement, posted on a domestic social platform, is short on technical detail but bold in its claim: accuracy that matches the leading models in its size class, from a parameter count that is modest by current standards.

The significance lies in the model's size and scope. In an era when headline AI achievements are often measured in hundreds of billions of parameters and massive compute budgets, a 10B model that competes on vision-language benchmarks would point to efficiency gains in architecture, pretraining data, or fine-tuning. Smaller models are cheaper to run, easier to host at the edge or on commodity cloud instances, and quicker for researchers and developers to experiment with, which extends their practical value well beyond raw leaderboard positions.

Step3-VL-10B's purported strengths (visual understanding, reasoning and mathematical problem solving) match the priorities of many research groups pursuing genuinely multimodal intelligence rather than text-only systems. If the performance claims hold up under external replication, the release could accelerate research and productisation in China's AI ecosystem by lowering the barrier to experimenting with capable vision-language agents.

Open-sourcing also has geopolitical and commercial implications. Openly released models invite community scrutiny, faster iteration and widespread adoption by startups, academic labs and hobbyists. However, they can also raise proliferation risks and open new avenues for misuse if safety measures are not well documented. For overseas observers, the move underscores how Chinese teams are converging on the same open-release strategies used by Western labs while emphasising efficiency and deployability.

The technical community will look for specifics: training data composition, compute used, benchmark names and methodology, architectural innovations and safety evaluations. Without those details, assessing Step3-VL-10B's robustness, generalisability and limitations will require independent testing. The immediate practical outcome is clear, though: another accessible, capable multimodal model is now circulating in China's developer ecosystem.

Whether this release marks a step change or a marginal efficiency improvement will depend on follow-up from independent researchers and on how Jieyue Xingchen supports the project with documentation, model weights, evaluation suites and guardrails. Either way, the announcement reinforces two trends shaping the near-term AI landscape: a push toward multimodality and a race to build smaller, cheaper models that can handle tasks previously reserved for much larger systems.